The Dirty Secrets about Monitor Calibration

You may think you should buy a probe to calibrate your display. There are several products on the market for around $100 to $1,000 with some software included. But what you get is not only a fake calibration: you may get worse results than the factory default. The more professional the monitor, the higher the risk of dropping from reference quality to class 3 or worse.

So why does this happen, and why do many users not realize it?

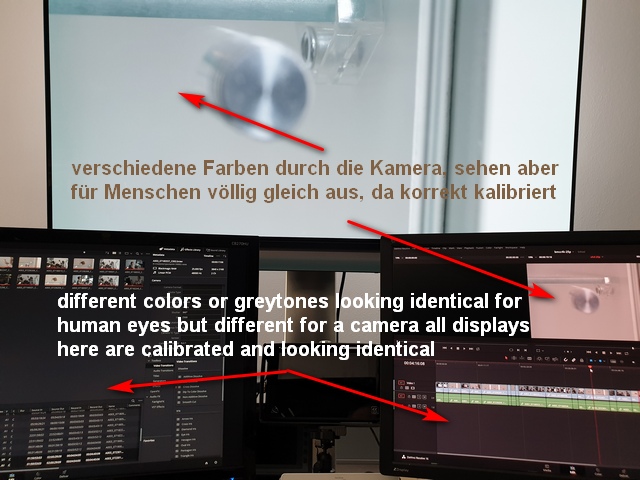

Most cheap probes are colorimeters. They work much like camera sensors, which are based on RGB color filters. You may have noticed that a camera often sees the colors of two monitors differently even when they look identical to you. The reason is that different monitors have different spectral properties. Put simply, you are measuring wrong values. In addition, cheap probes are not temperature stable. If you measure several thousand colors, the probe is warmed up by the display's energy, so if you measure the same color again after, say, 30 or 120 minutes, you will get different values. And most importantly for generating 3D LUTs: cheap colorimeters cannot measure deep blacks correctly, which makes them especially useless for OLEDs!
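To make the spectral mismatch concrete, here is a minimal toy sketch in Python. Everything in it is an assumption for illustration: the Gaussian curves stand in for the eye's luminance sensitivity, for a probe filter that only approximates it, and for two display spectra. None of it is real CIE or vendor data. The probe is scaled so that it reads correctly on the broadband display, and then it is pointed at a display with a narrow emission peak.

```python
import math

WAVELENGTHS = range(380, 781)  # visible range in 1 nm steps


def gaussian(wavelength, center, sigma):
    """Unnormalized Gaussian curve used for all toy spectra below."""
    return math.exp(-((wavelength - center) ** 2) / (2 * sigma ** 2))


def response(sensitivity, spectrum):
    """Sum sensitivity x spectrum over the visible range (1 nm steps)."""
    return sum(sensitivity(w) * spectrum(w) for w in WAVELENGTHS)


# Hypothetical curves: the "true" luminance sensitivity and a probe filter
# that only approximates it. These are assumptions, not measured data.
def observer(w):
    return gaussian(w, 555, 40)


def probe_filter(w):
    return gaussian(w, 545, 45)


# Two toy displays: one broadband, one with a narrow emission peak.
def broadband_display(w):
    return gaussian(w, 560, 60)


def narrowband_display(w):
    return gaussian(w, 530, 10)


# Scale the probe so that it reads correctly on the broadband display.
scale = response(observer, broadband_display) / response(probe_filter, broadband_display)


def relative_error(spectrum):
    """Relative error of the scaled probe reading versus the true value."""
    true_value = response(observer, spectrum)
    probe_value = scale * response(probe_filter, spectrum)
    return probe_value / true_value - 1.0


broad_error = relative_error(broadband_display)
narrow_error = relative_error(narrowband_display)
print(f"broadband display error:  {broad_error:+.2%}")
print(f"narrowband display error: {narrow_error:+.2%}")
```

In this toy model the probe is exact on the display it was scaled for and several percent off on the other one, although nothing about the probe itself changed. A real colorimeter has three filters instead of one, but the mechanism is the same.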

Another big fake is that most simple calibration software does not let you set the target gamut. So you do not realize that you are measuring a P3 gamut when you actually want to work in REC709. After the "calibration" you are still in the native gamut of the display, or of the display preset in use. For example, laptop displays from Apple are not sRGB/REC709; if your target audience is on YouTube, you need REC709 and not P3.
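As a rough illustration of how far apart the two gamuts are, the following Python sketch compares the areas of the REC709 and P3 triangles in the CIE 1931 xy diagram, using the published primary chromaticities. Treat it as a rule-of-thumb comparison only: area in xy is not perceptually uniform, and the function name is just chosen for this example.

```python
def triangle_area(primaries):
    """Area of the gamut triangle spanned by three (x, y) primaries."""
    (x1, y1), (x2, y2), (x3, y3) = primaries
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2.0


# CIE 1931 xy chromaticities of the red, green and blue primaries.
REC709 = [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)]
P3 = [(0.680, 0.320), (0.265, 0.690), (0.150, 0.060)]

area_rec709 = triangle_area(REC709)
area_p3 = triangle_area(P3)
ratio = area_p3 / area_rec709

print(f"REC709 area: {area_rec709:.5f}")
print(f"P3 area:     {area_p3:.5f}")
print(f"P3 / REC709: {ratio:.2f}")
```

The P3 triangle is roughly a third larger, and its red and green primaries sit in different places. A REC709 signal shown on an unmanaged P3 panel therefore lands on more saturated colors than intended.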

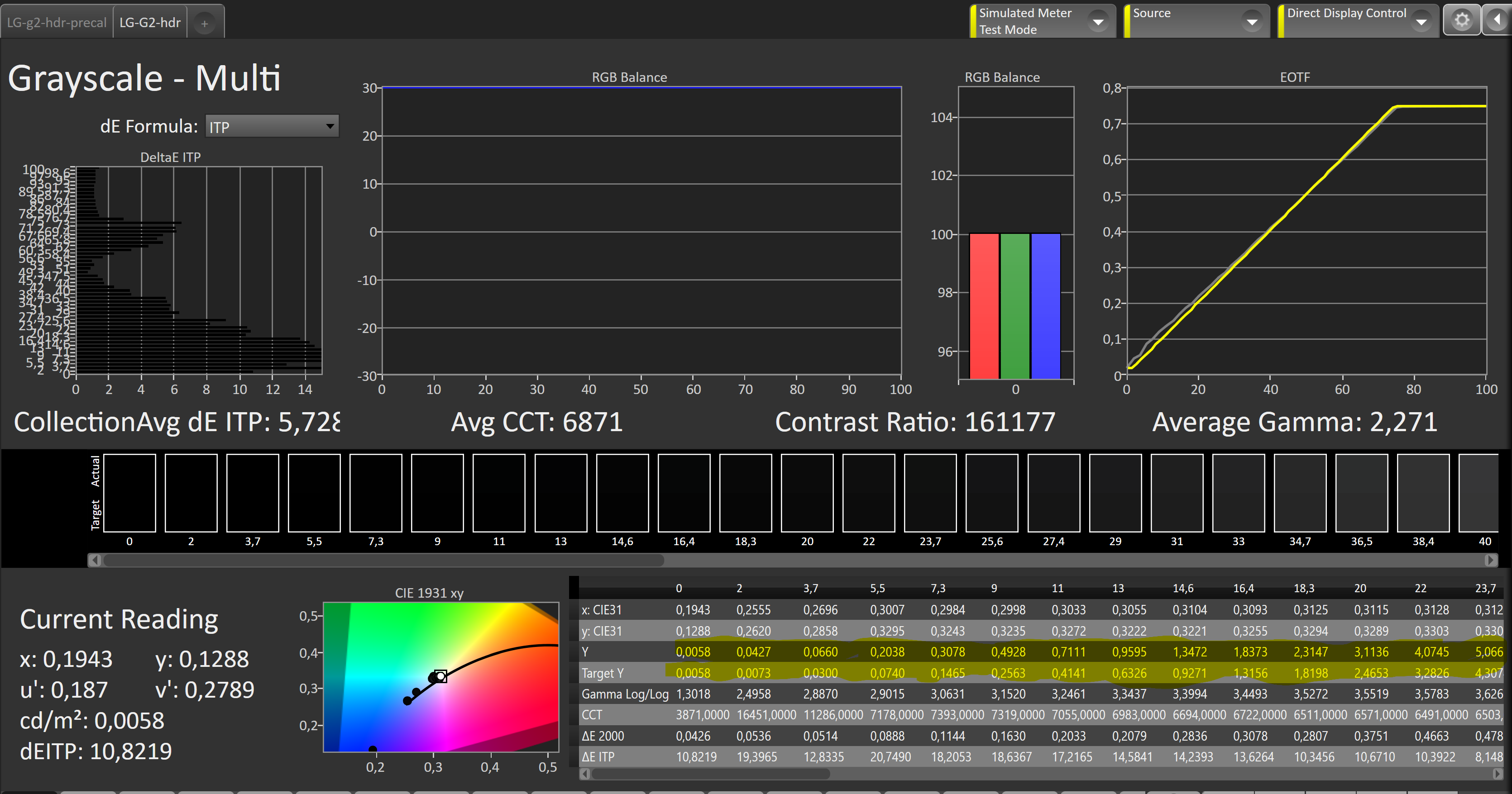

The next problem with such cheap probes shows up when you want to calibrate HDR: they are simply not sensitive enough to calibrate the dark range up to 10% of the video level. This range holds a huge amount of detail, and you can find many YouTube video reviews complaining that LG OLEDs cannot show these areas properly, especially compared to high-end reference monitors like Sony's BVM HX310 and similar.

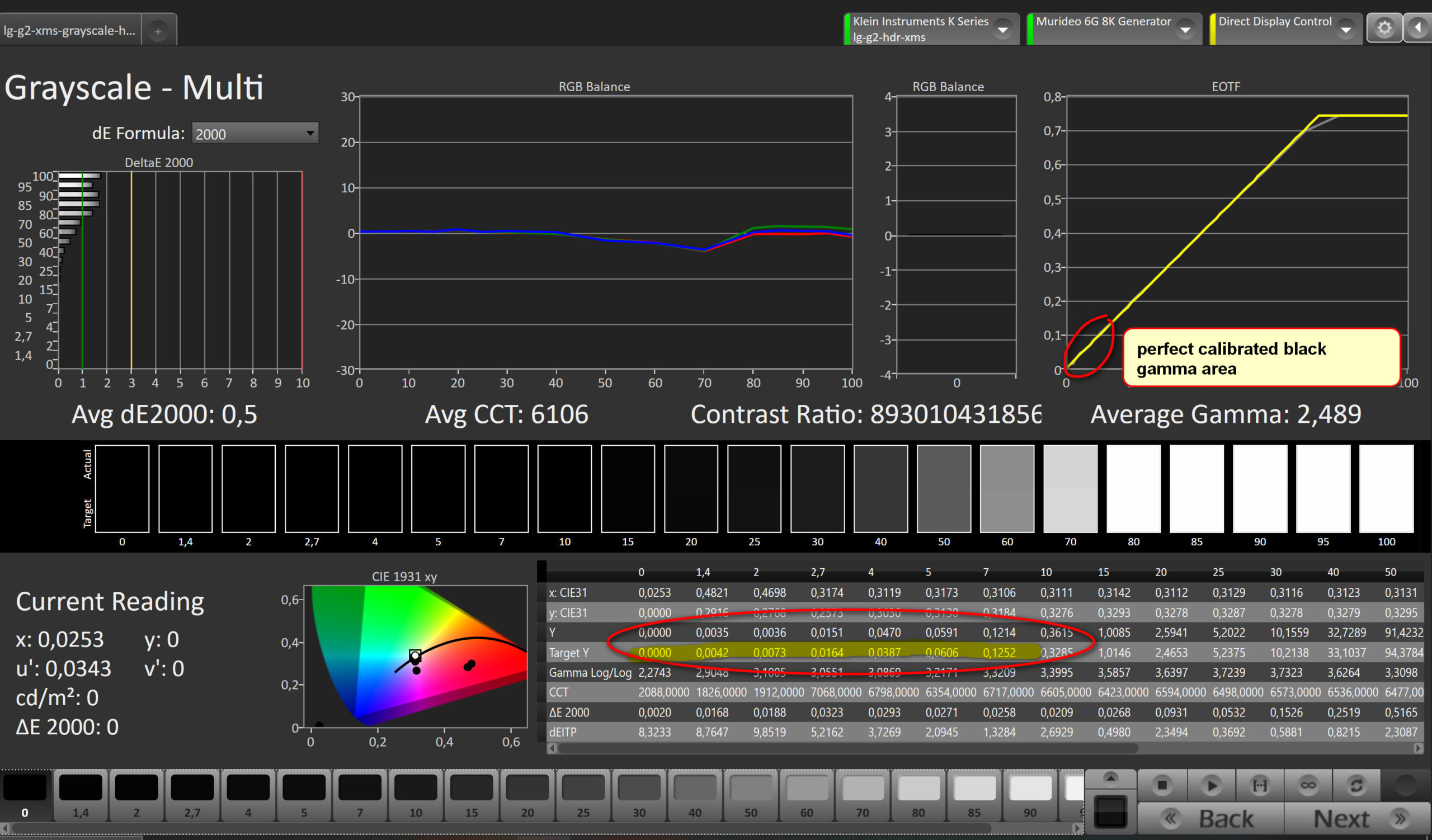

However, in the screenshot you can see how a high-end probe correctly calibrated the range from 0 to 10% with luminance values from 0.0000 cd/m2 to 0.1 cd/m2. Cheap probes cannot measure anything below 0.1 cd/m2 at all, and up to 1 cd/m2 they are very inaccurate and far too noisy. High-end probes are dramatically more sensitive, by a factor of 1000.

If you look at the "target y" and "y" values, you can see that up to 10% of the video level the values are below 0.1 cd/m2. A cheap probe like the i1 Display Pro or a Spyder is not able to measure below 0.1 cd/m2, so its measured value does not change at all up to 10% signal level. The cheap variants of the measuring software also measure only 0% and then 10%, continuing in steps of 10 up to 100%, whereas we take 8 steps below 10% alone, as seen here! At higher values it is like with cameras: the sensors first produce a lot of noise and do not give a stable signal. With cheap probes this continues up to approximately 20-25% signal level, or about 5 nits, depending on SDR or HDR.

The measurement image above comes from a professional colorimeter, the Klein K10a, which can also calibrate the black range correctly and measure accurately without any noise. Here you can see how well the LG OLED can be calibrated in HDR, especially in the blacks. The widespread myth that the LG does not have correct black levels is only due to the widespread use of bad measuring probes. Color graders who do not buy a 25,000 euro monitor usually do not buy 25,000 euro calibration equipment either. Even among those who buy a Sony BVM HX310 or similar, very few do.
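The difference between the coarse patch set and the denser sampling below 10% can be sketched in a few lines of Python. This is only an illustration: the function name is made up, and the even spacing of the 8 extra points is an assumption, since the exact levels used in the screenshot are not stated here.

```python
def grayscale_levels(dense_points=8, coarse_step=10):
    """Signal levels in percent: coarse steps plus extra points below the first step.

    The extra points are spaced evenly between 0% and the first coarse step.
    That spacing is an assumption made for this sketch.
    """
    coarse = [float(p) for p in range(0, 101, coarse_step)]
    spacing = coarse_step / (dense_points + 1)
    dense = [round(spacing * i, 3) for i in range(1, dense_points + 1)]
    return sorted(set(coarse + dense))


levels = grayscale_levels()
below_ten = [p for p in levels if 0 < p < 10]

print("all levels:", levels)
print("points between 0% and 10%:", len(below_ten))
```

A patch set of only 0%, 10%, 20% and so on has no measurement point at all inside the range where the near-black detail sits, so errors there cannot even show up in the report.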

Poor uncalibrated black levels, especially in HDR, can only be measured at all with high-quality probes like the Klein K10a.

If you buy a monitor with specs like 100% REC709, does this mean it is a perfect display?

In most cases, no. Many displays reach the limits of a colorspace gamut like REC709, which means they cover 100% of the gamut. But that does not mean all colors inside the gamut are located at the right position. Cheap calibration software mostly calibrates only in two dimensions, and only the white point. Most of the time this does not correct the many other wrong color locations. And if only the white point is corrected, the limits of the colorspace may still be wrong, as described above for the laptop displays.
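Here is a small Python sketch of one way a display can cover 100% of REC709 and still put colors inside the gamut in the wrong place. It assumes a display whose primaries are exactly REC709 but whose tone curve is a pure power function with gamma 2.0 instead of a 2.4 target. Both gamma values and the test color are assumptions picked for illustration, and a wrong tone curve is only one of several possible causes.

```python
# Linear RGB to CIE XYZ matrix for REC709 primaries with a D65 white point.
REC709_TO_XYZ = [
    (0.4124, 0.3576, 0.1805),
    (0.2126, 0.7152, 0.0722),
    (0.0193, 0.1192, 0.9505),
]


def chromaticity(signal_rgb, gamma):
    """xy chromaticity shown for an RGB signal on a pure power-law display."""
    linear = [channel ** gamma for channel in signal_rgb]
    X, Y, Z = (sum(m * c for m, c in zip(row, linear)) for row in REC709_TO_XYZ)
    total = X + Y + Z
    return (X / total, Y / total)


TARGET_GAMMA = 2.4   # assumed calibration target
DISPLAY_GAMMA = 2.0  # hypothetical uncorrected display

pure_red = (1.0, 0.0, 0.0)
mixed_orange = (0.8, 0.4, 0.2)

red_target = chromaticity(pure_red, TARGET_GAMMA)
red_display = chromaticity(pure_red, DISPLAY_GAMMA)
orange_target = chromaticity(mixed_orange, TARGET_GAMMA)
orange_display = chromaticity(mixed_orange, DISPLAY_GAMMA)

print("red primary, target vs display:", red_target, red_display)
print("mixed color, target vs display:", orange_target, orange_display)
```

The red primary lands on the same xy point in both cases, so a gamut coverage check reports a perfect match, while the mixed color visibly shifts. Correcting the white point alone does not fix this kind of error; that takes a correction across the whole color volume, for example a 3D LUT.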

Do not waste money and time

I can tell you this story because I bought all the cheap probes myself, and many new generations of them, until I realized what does not work with them and why. It took years of research, a steep learning curve and many thousands of bucks to get here. Professional calibration equipment costs around $25,000 to deliver reliable results. I have many customers who bought something from X-Rite or Datacolor and then realized that they could not match different monitors, like their iMac display and their video monitor. Try it yourself, but you will waste your money.

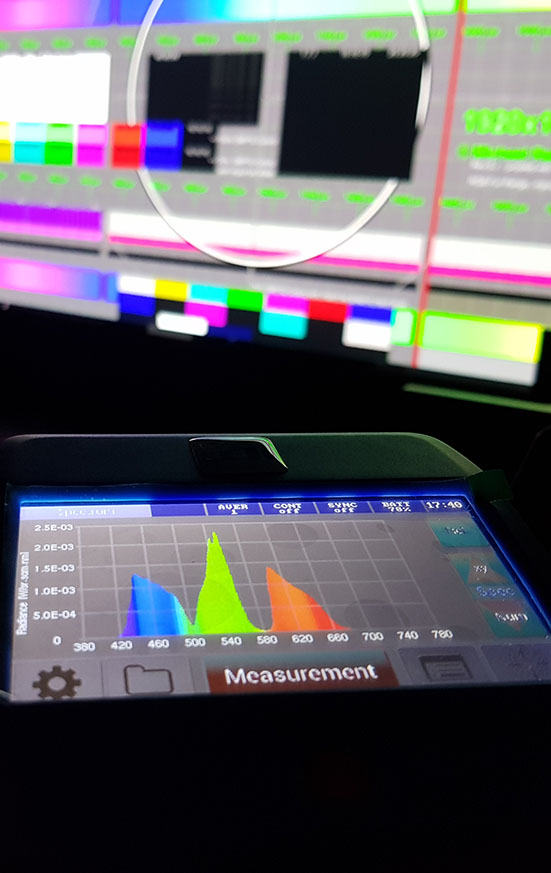

Spectroradiometer like the JETI 1511

Only high-end spectroradiometers with good spectral resolution are able to correct a colorimeter's poor handling of the different spectral properties of different display technologies (like OLED, plasma, LCD, etc.), and you need to do this every time, for every display. If you buy a cheap probe with better software and calibration presets for different display types, you will still fail if you want to achieve reference quality or to match different displays (like reference vs. client monitors). Cheap spectroradiometers like the i1Pro do not have enough resolution to be exact enough for reference quality: you are off from the correct color by a Delta E2000 of at least 0.5 to over 1.0. A good reference display has an average Delta E2000 of only 0.5 to 0.75. If you add the errors of a cheap spectro on top, you end up with a Delta E2000 of 1.0 to 2.0, which means you have lost reference quality.

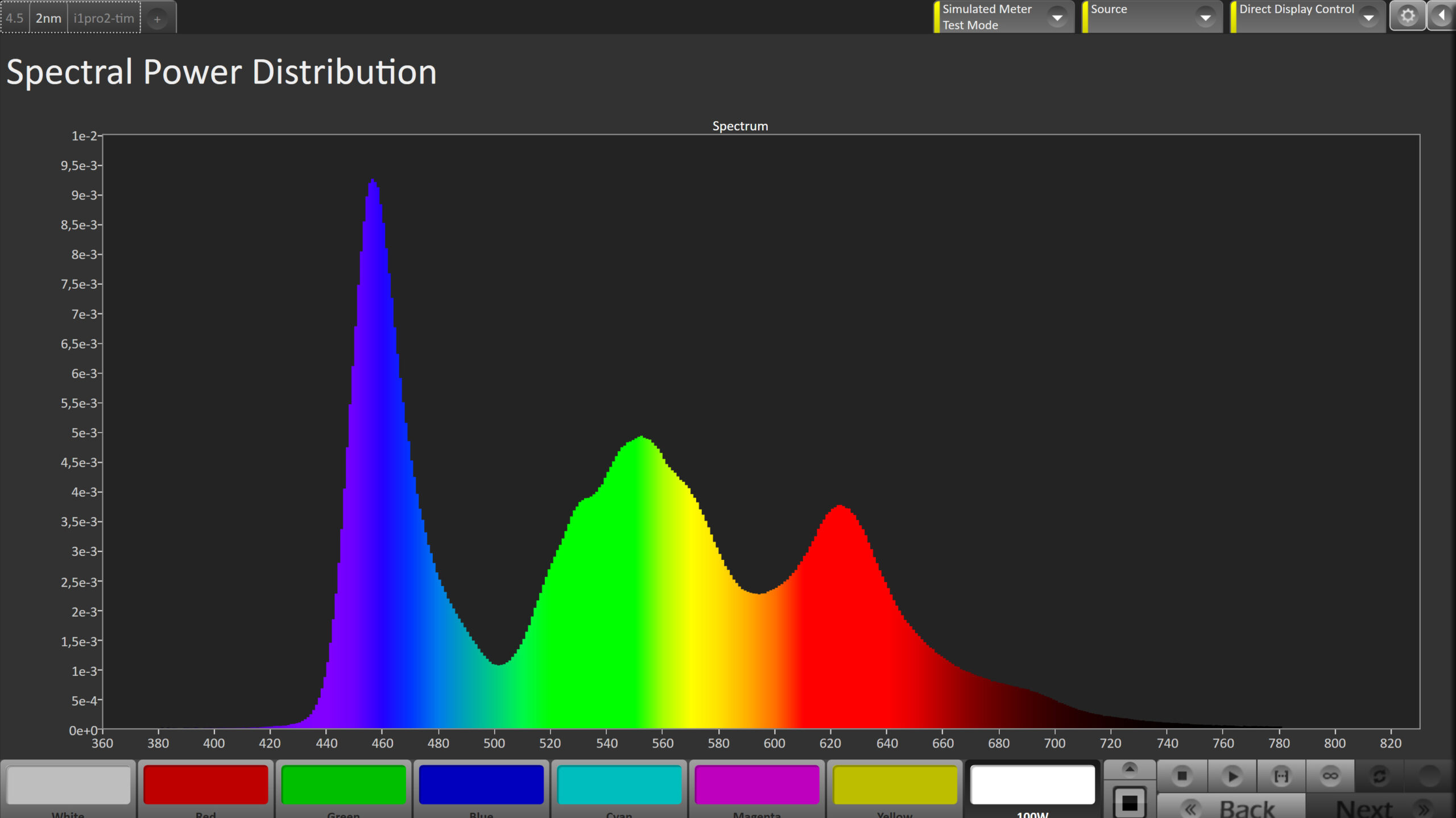

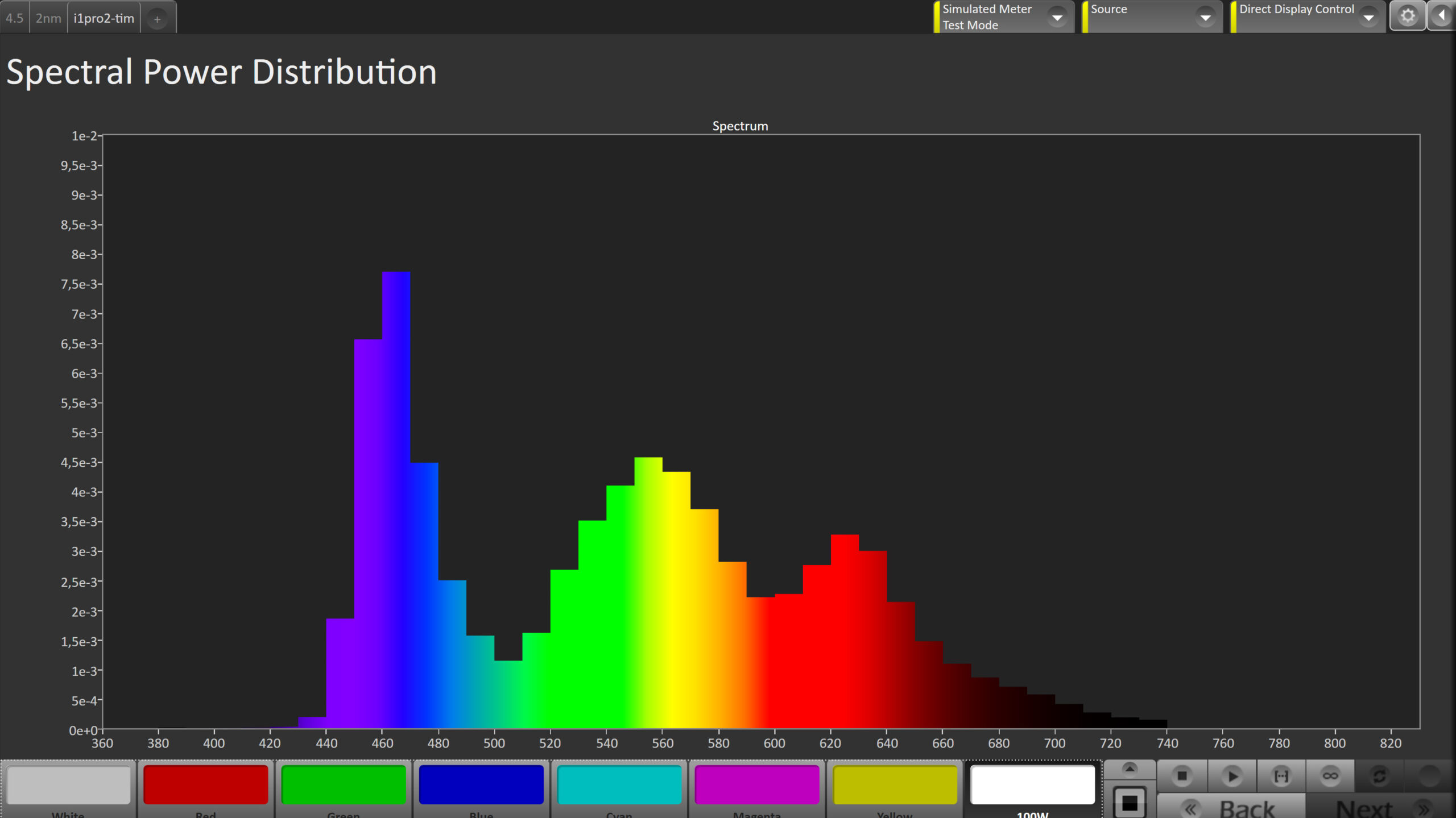

High-resolution Jeti 1511 Spectroradiometer with true 2nm resolution

The X-Rite i1Pro2 and Pro3 have a resolution of only 10 nm (nanometers), which is much too low. This does not get better even if your open source calibration software tries to fake a higher resolution for this cheap spectro through software settings.
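Why the sampling step matters can be shown with a simplified Python sketch. It assumes a single narrow emission peak (a Gaussian centered at 611 nm with a sigma of 3 nm, both hypothetical values) and compares the integrated energy obtained from samples every 10 nm and every 2 nm against a fine 0.1 nm reference. Real instruments also have an optical bandwidth, which this sketch leaves out.

```python
import math


def emission_peak(wavelength, center=611.0, sigma=3.0):
    """Hypothetical narrow emission peak of a display primary."""
    return math.exp(-((wavelength - center) ** 2) / (2 * sigma ** 2))


def integrate(step_nm, start=380.0, stop=780.0):
    """Rectangle-rule integral of the peak, sampled every step_nm nanometers."""
    count = int(round((stop - start) / step_nm)) + 1
    return sum(emission_peak(start + i * step_nm) for i in range(count)) * step_nm


reference = integrate(0.1)  # fine sampling, used as the "true" value
error_10nm = integrate(10.0) / reference - 1.0
error_2nm = integrate(2.0) / reference - 1.0

print(f"10 nm sampling error: {error_10nm:+.1%}")
print(f" 2 nm sampling error: {error_2nm:+.1%}")
```

With the 10 nm grid the result depends heavily on where the peak happens to fall between two sample points, and interpolating those samples afterwards cannot bring back information that was never measured.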